Josh represents 49 people in 17 topics

Represents 1 person in

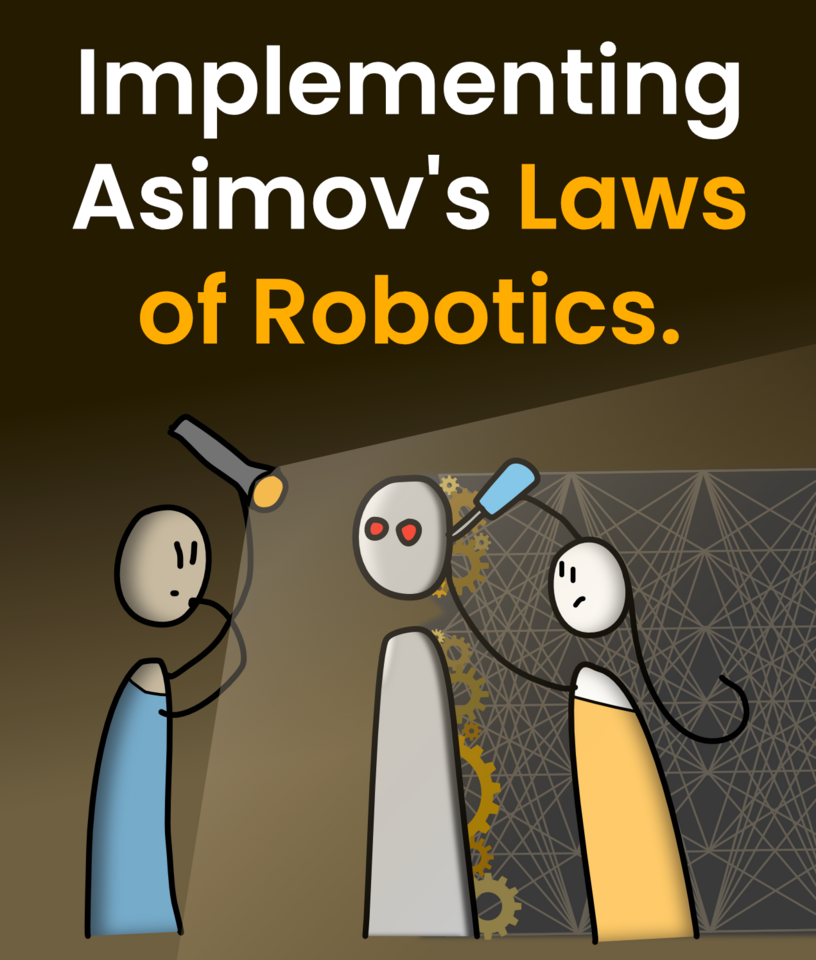

Implementing Asimov’s Laws of Robotics (The first law) - How alignment could work.Represents 4 people in

The ASI Condom - Artificial Superintelligence Safety WrapperRepresents 1 person in

Collaborative Summary of the ‘sparks of Agi’ Paper - Gpt4Represents 2 people in

The Dynamics of Traditional HierarchiesRepresents 1 person in

A story about creativity and feedbackRepresents 1 person in

Transformers - Attention based networksRepresents 1 person in

Interpretability of AIRepresents 5 people in

Are Large Language Models Glorified Auto-text? Where Does LLMs Intelligence Fail?Represents 2 people in

MidFlip: Iterating in publicRepresents 7 people in

AI & Over OptimizationRepresents 1 person in

How Artificial Intelligence worksRepresents 7 people in

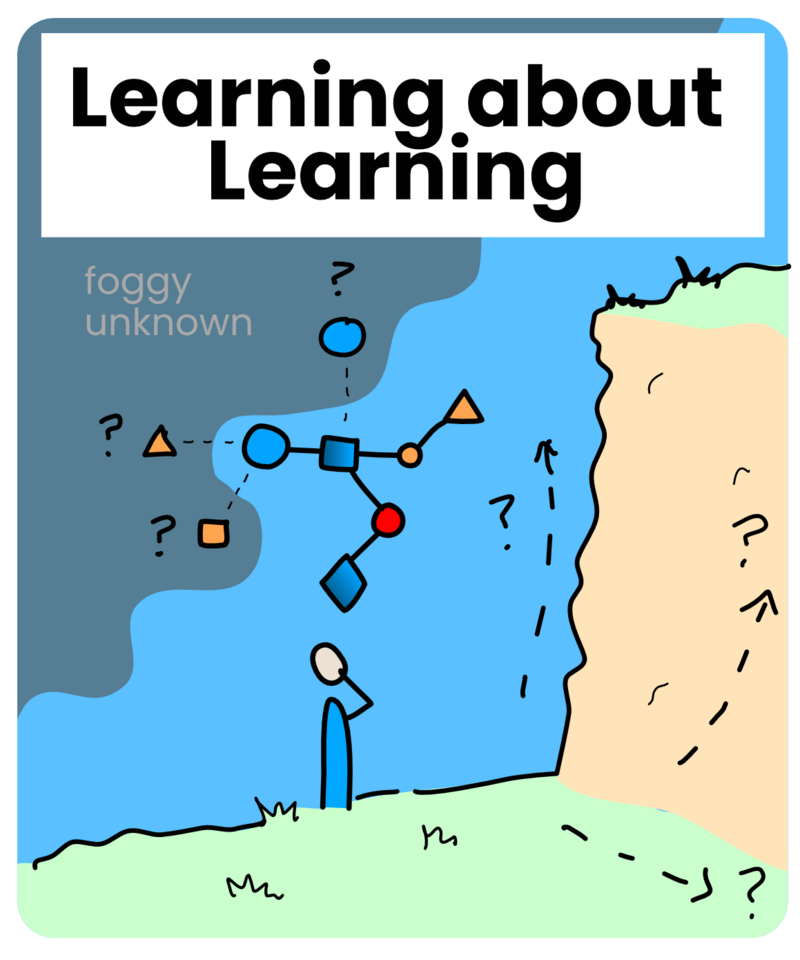

Learning about LearningRepresents 1 person in

Open-sourced Morality Training EnvironmentsRepresents 5 people in

Memetics - Ideas are aliveRepresents 3 people in

How to control your emotions. A Game theory perspective.Represents 4 people in

Mitigating the Potential Dangers of Artificial IntelligenceAdd friend

Josh's awards

Created 20 edits

Created 5 posts

100 merit award

20 merit award

5 Champ texts won in the Mitigating the Potential Dangers of Artificial Intelligence Topic

4 Champ texts won in the MidFlip: Iterating in public Topic

2 Champ texts won in the Tea Time 🍵 Topic

2 Champ texts won in the AI & Over Optimization Topic

2 Champ texts won in the How Artificial Intelligence works Topic

2 Champ texts won in the Testing Lalalala Topic

1 Champ text won in the Inflation Watch 🏰 Topic

2 Champ texts won in the Learning about Learning Topic

I am interested in design, psychology, game theory, information theory, and networks. My goal is to create systems that connect people so that they can collaboratively understand a subject, collect information, and solve large communal problems.

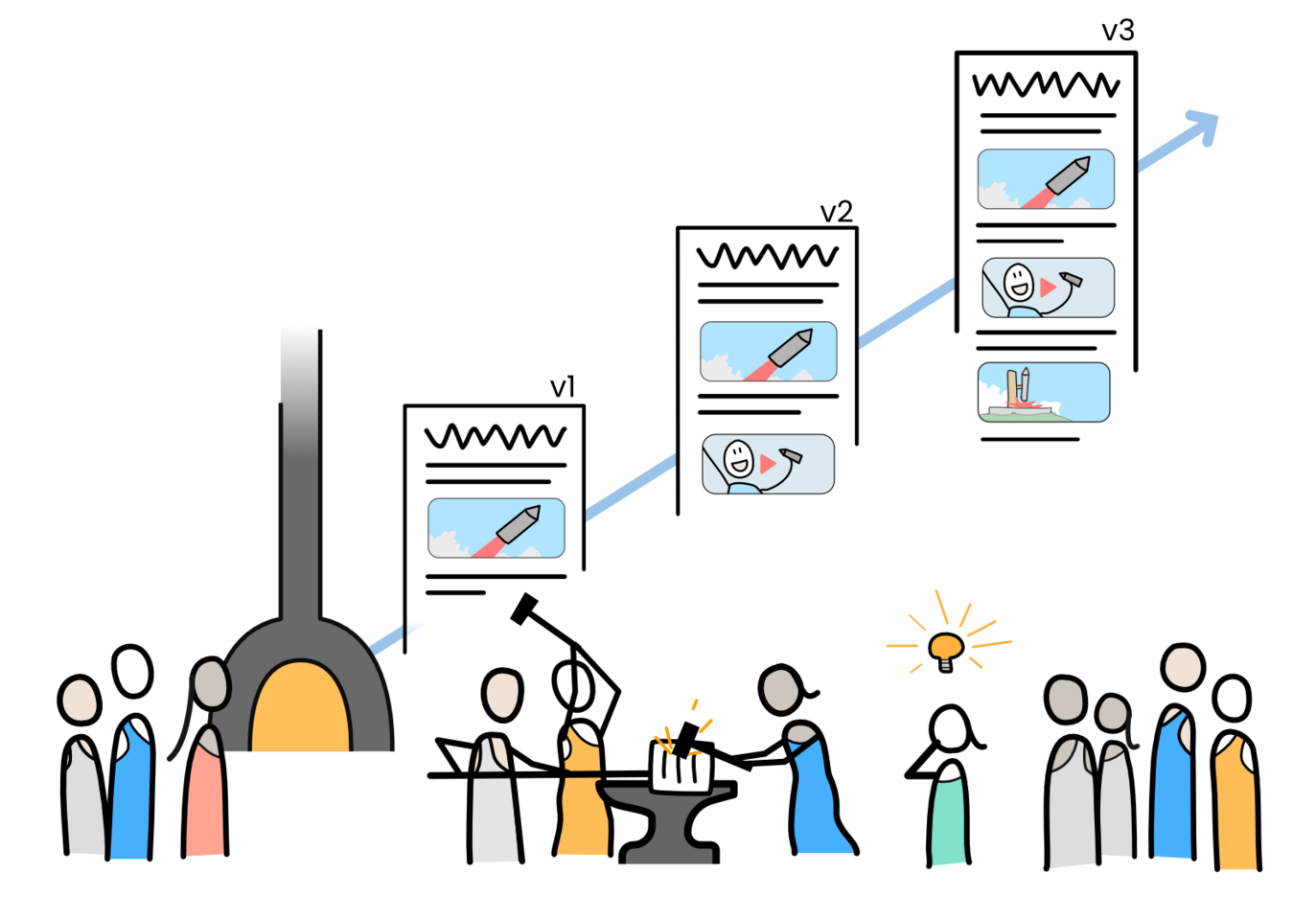

I am currently CEO and cofounder of MidFlip. MidFlip is a social wiki, where your favorite topics are improved by the crowd.

For each topic, the crowd forges and collects the best videos, images, and explanations. You can cooperate and compete to build the wiki, or you can simply post your own blogs, content, and stuff.

Wish me luck: we are currently launching the MidFlip beta! We are dedicated to an iterative strategy, where we continually improve MidFlip based on feedback, so check out MidFlip and give us feedback!

Here we are creating a mental model of how learning occurs. It is a get-in, get-out, don’t-f-about type of model. We skip the low-down explanations of neurons and move straight to representations. How do representations form? How do they interconnect? And how do they contribute to learning?

Int...

• 0

In 1942 Isaac Asimov started a series of short stories about robots. In those stories, his robots were programmed to obey the three laws of robotics. Each story was about a situation where the robots acted oddly in order to comply with the three laws.

The three laws:

A robot may not injure a human being or,...

• 2

Intelligence is like fire: incredibly useful but devastating if pointed in the wrong direction.

Some people believe that with intelligence comes morality. But this intuition is generally considered wrong by the AI community. There is no reason to believe that intelligent systems develop morality.

The top theory of why Morality forme...

• 0

Artificial Intelligence is based on optimization. A measurable goal is set, and the system updates to better achieve that goal.
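As a rough illustration of "set a measurable goal, then update to better achieve it", here is a minimal gradient descent sketch. The function names, the toy goal of minimizing (x - 3)^2, the learning rate, and the step count are all assumptions chosen for illustration, not anything taken from the article.

```python
def optimize(loss_grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly nudge x against the gradient of the loss (plain gradient descent)."""
    x = x0
    for _ in range(steps):
        # Move a small step in the direction that reduces the loss.
        x -= learning_rate * loss_grad(x)
    return x


def grad(x):
    """Gradient of the hypothetical goal loss(x) = (x - 3)^2."""
    return 2 * (x - 3)


# Starting far from the goal, the updates pull x toward 3.
result = optimize(grad, x0=0.0)
print(result)
```

The system never "knows" why 3 is good; it only follows the measured loss downhill, which is why the choice of goal matters so much.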

But what if the system over-optimizes? Consider the famous hypothetical story of the paperclip maximizer: a system that is told to make paperclips by a paperclip company. The system does so to the absurd extreme...

• 3

Where are the weaknesses in large language models? Do they have predictable failings? Can I (playground bully style) call them glorified auto-text, a stochastic parrot, a bloody word calculator?

As an oversimplified summary, Large Language Models are neural networks trained on massive amounts of text data. Given...

• 0

A new primary school student, Maya, enters a new school. Unbeknownst to all, she brings with her a virus. But not just any virus... a mind virus. An idea. An idea that will spread throughout the school and never, ever leave again.

In this article, we will discuss memetics, a discipline in which we treat ideas, or "mem...

• 2

The ASI condom - Or the superintelligence safety wrapper - is a hypothetical set of tools to help contain FOOM events. This is in the hope that we can delay FOOM events until we can be more assured of interpretability and alignment research.

This is a collaborative article. Feel free to make edits; such edits will be voted upon via l...

• 2